Automation, Me, and You

Society has historically bent to the whims of progress, though what counts as progress is subjective, depending on who you are. For this newsletter, I'm specifically examining progress in a technological sense and what happens to a society and its people when they embrace the benefits of automation. If one society does not progress in this way, another will, and so on. That doesn't necessarily mean the society that holds out will die, but it will be "left behind" as the rest of the world moves forward on the global stage, the theatre of the games we human beings play.

Some days I think being left behind, given the subject of the newsletter this week, wouldn’t be so bad.

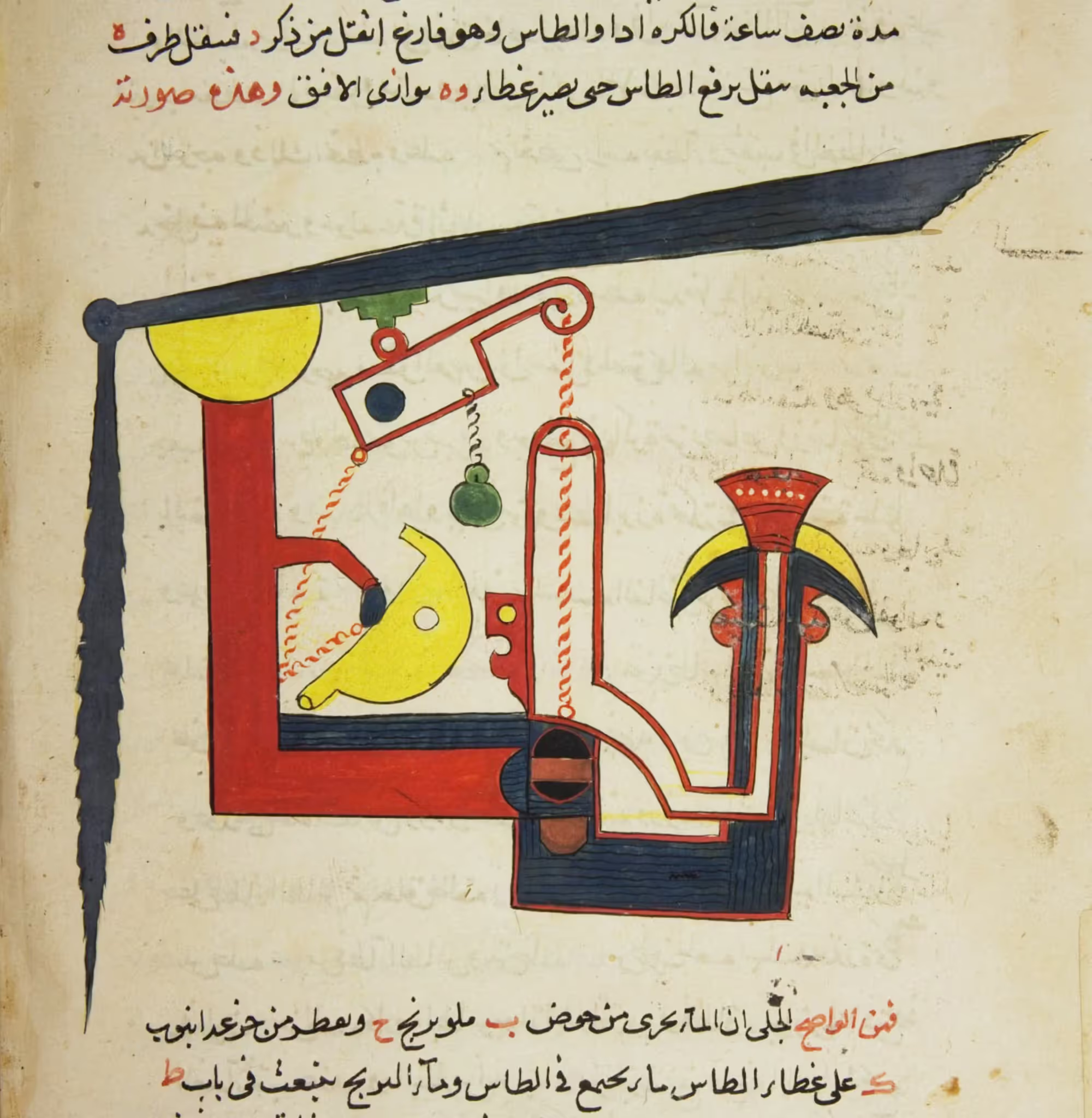

Some of the earliest known automated machines appeared in ancient Greece, among them the water clock, a clever device that controlled the flow of water to measure time, removing the need for human intervention once set in motion. Now, think about the poor soul who, one day, earning their living, minding their own business, and hoping to provide for their family, gets a tap on the shoulder and is told that some new invention will be replacing them. Terrible. What a shame. What are they to do? And this was going on as far back as 2,500 to 3,200 years ago. No one, whether in ancient Greece or tucked away in a shoddy cubicle in some modern-day high-rise, wants the water clock moment to happen to them, but it happens all the time.

If you've been paying attention to AI news, the tech sector and Wall Street appear to be on one side, while mostly everyone else is on the other: profit and progress versus the many who will suffer from the progress that is certainly coming. Like the water clock in its day, AI (a term that could easily be swapped out for automation) is on an inevitable path of progress. Doesn't it feel like there's no stopping it? I was young when the internet came out, but for those who were older at the time, does this feel different? If you examine the Old Testament, a moment like this is loosely associated with the idea of replacement as an act of restoration. But restore to what end? And for whom? And, as one of my great writing professors asked when advising me on the importance of cutting a manuscript, "What will be gained by doing so, and what will be lost?"

Because what is at stake if these AI-obsessed technocrats-turned-disciples of AGI (their pseudo-God) get their way, besides jobs, privacy, and sanity, is, well, everything.

In this newsletter:

San Francisco's Mayor Lurie Has a Plan

(And It Involves Rich People and the Possible Death of Your Privacy)

Anthropic's AI Blog and Me

Art for All

San Francisco's Mayor Lurie Has a Plan

(And It Involves Rich People and the Possible Death of Your Privacy)

Take San Francisco's new multi-millionaire "man of the people" mayor, Daniel Lurie, who recently spoke at the Bloomberg Tech conference and continues to rally behind his plan to reinvigorate the city by courting the crowd he knows best: wealthy residents, executives, big tech, and banks.

If you don't know Mayor Lurie or my slight disdain for him, I won't get into it now. Instead, check out this post to get an idea. Lurie's plan is to keep tapping the wealthiest people in the land to revitalize San Francisco by investing in schools, arts and culture, and homelessness programs. Sounds great, but what irks me about these efforts isn't what the money is being put towards but who is supplying the cash. Rich people, as far as I know them, rarely do anything for free. That's why they're rich. In early May, SFist reported that the Breaking the Cycle Fund, with Lurie's help, raised "...$37.5 million — $11 million of which comes from the foundation he started nearly a decade ago to address homelessness." Who is throwing down on these revamps? The big guns include "...the Charles and Helen Schwab Foundation ($10M), billionaire Michael Moritz's Crankstart Foundation ($10M), Keith and Priscilla Geeslin ($6M), and the Horace W. Goldsmith Foundation ($500,000)."

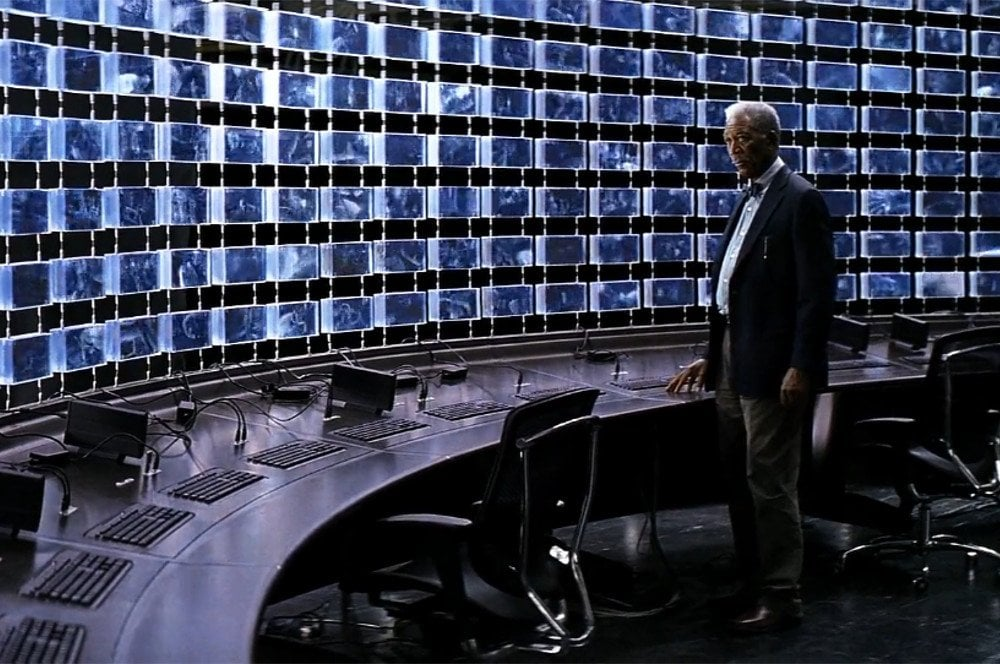

There are plenty of examples. Take former Mayor London Breed's involvement with Chris Larsen, executive chairman of Ripple Labs. Aside from Ripple's association with the crypto token $XRP and the raving madness that is the XRP army, Ripple is an American tech company based in San Francisco specializing in enterprise "blockchain solutions," mostly in finance and banking. Larsen has been involved with San Francisco, its infrastructure, and its politics for years, reportedly paying ethics fines and attorney fees for Mayor Breed in 2021 after she broke campaign finance rules. He spent $600,000 supporting Measures E and F in March 2024, both of which were placed on the ballot by Breed and passed. What's interesting about Measure E, and what really circles back to the dangers of automation (in this specific case, AI, drones, and surveillance), is Larsen's peculiar fixation on funding San Francisco's police department. So far, Larsen has donated $14.4 million to the San Francisco Police Department, including $9.4 million for an upgrade to the Real-Time Investigation Center, which was relocated from the Hall of Justice to Ripple's vacant office space at 315 Montgomery Street.

Lurie mentions this at the beginning of the above video.

What's curious to me, and should worry everyone, is why this billionaire, worth between $7 and $8 billion, is showering Lurie with cash and leases worth $2.3 million (for a space Ripple isn't even using). As protos.com reported, the money was "...used to purchase 12 drones, various software, and parking fees." There is also apparently a giant "surveillance screen" in the Real-Time Investigation Center that looks and acts like the giant screen in that scene in The Dark Knight.

"Beautiful..." Morgan Freeman's character, Lucius Fox, says, "unethical...dangerous."

Officials have stated that car thefts and burglaries are down and have credited more than 500 arrests to what's being described as "real-time monitoring tech around the city from live data from drones, surveillance cameras and automated license plate readers," according to CBS News. The Electronic Frontier Foundation, a leading nonprofit dedicated to defending civil liberties in the digital world and based in SF since the 1990s, isn't buying it; like many others, it believes more surveillance is not the answer.

EFF released a statement saying, "...the current crime fall the SFPD is taking credit for is happening nationwide, even in cities that have not eroded democratic principles in order to put drones over the city and a camera on every block."

But really...automated crime prevention funded by billionaires whose headquarters are located in their business buildings...what could go wrong?

Anthropic's AI Blog and Me

TechCrunch recently reported on Anthropic, a San Francisco-based AI company (backed by Amazon, Google, Salesforce Ventures, Cisco Investments, and the US military), launching Claude Explains, a new "blog" whose posts are written by the company's Claude family of AI models. The blog covers everything from codebases and creative writing to data analysis and business strategy, with an editorial process that, per the company, requires "...human expertise and goes through iterations." The aim, according to one nameless, mysterious spokesperson, is to "augment their work." This move appears to be another variation of automation that I, other writers, and professionals in various sectors will keep encountering, with varying degrees of worry, dread, and anxiety looming over us as expediency and online visibility become increasingly obligatory.

I never got into blog writing when it was big in the early to mid-2000s. Graduating high school in 2006, I was writing, but not in any professional capacity. Travelogues, terrible poetry, and making mixtapes for girls I had crushes on were my thing. Blogs are still very much around, but they have mutated into other mediums for digesting "content": podcasts, Substacks and newsletters, YouTube, Twitch, TikTok and Instagram channels, and on and on. AI automation is being introduced to these mediums too. For example, Wired recently wrote that you can now build a podcast with Gemini 1.5 Pro and NotebookLM.

The trade-off, like the one we're seeing with increased surveillance due to a fall in SFPD's numbers over the years, is what interests me most here. If we reach a point where all blogs on any given subject are written with AI, perfectly optimized for the algorithms readers use to find them, how can any human-only piece compete? It can't. Human writers will eventually have to automate, just like major brands and political parties had to turn to TikTok to capture, for example, the youth vote. a16z believes that Generative Engine Optimization (GEO) is the future, which, if true, means that whichever sources (written by humans or AI) an AI chooses to reference or summarize will matter more than ranking high on a Search Engine Results Page (SERP). Why wouldn't an AI, trained solely by the company that owns it, choose its own AI-written content to summarize?

I would be a hypocrite if I didn't admit that I too use these tools for myriad reasons: news updates, research, light editing, and even looking up words and phrases I can't find. AI and large language models are, in some circumstances, useful. They expedite tasks. They do what they say and then some, so much so that universities are already building them into undergraduates' required coursework. AI is here, like the printing press, like the personal computer, like the internet, like the smartphone. That said, haste has a place in finite games but none in infinite games like art, and writing, literature, poetry, and the rest belong firmly to art, a game with no defined endpoint. When one is imposed, you can feel the work slip into the finite.

These AI companies train their models in very specific ways. This training, like Google Search or Meta's and TikTok's algorithms, can be tweaked and manipulated to include what a company wants and exclude what it doesn't. Today, their models produce seemingly fair, democratic outputs, claiming organic selection over paid ads, but if history is any guide, "enshittification" is not a matter of if, but when. When that happens, we can expect these companies to lean on their power, and on the leverage we paid for them to have and use, turning their once-optimistic spin on "collaboration" into something more nefarious, given how much productivity and "advantage" it gave companies to get ahead. This is what worries me, because I've seen it happen over the course of my life, most evidently with social media.

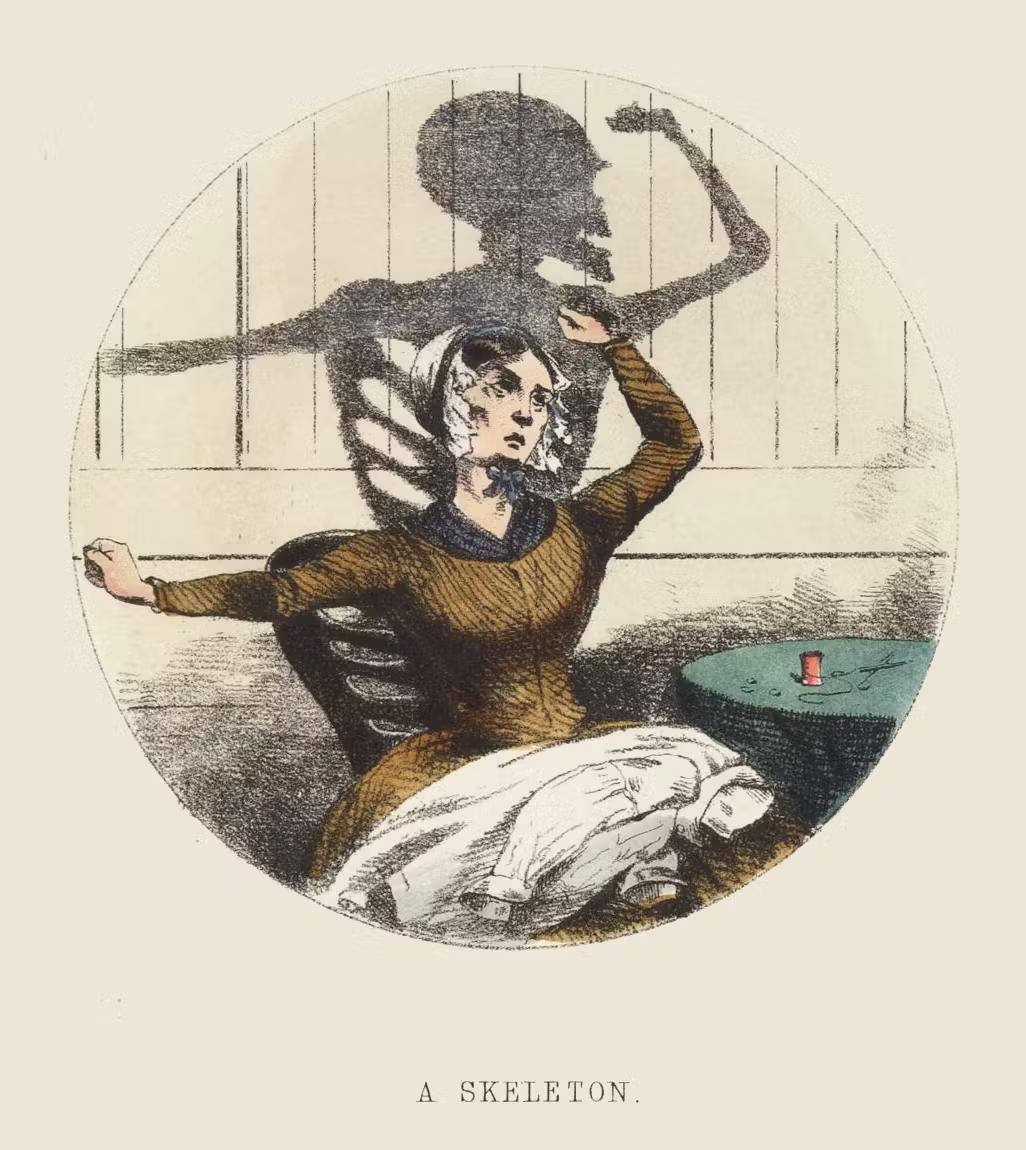

In the context of blog posts and, more generally, the written word, censorship as a means of securing power is nothing new; we're living with it today.

Art for All

Art is exploration and discovery, a path across mediums for presenting the stories of all people throughout time. To be an artist and work on one's art is to be - not to sound too self-aggrandizing - a conduit for the energy of one's time, transmuting it into something the people of that time can attempt to understand and, hopefully, providing deeper context for today and for all time. The problem is not human beings' desire to create for the betterment of humanity (it is necessary in any living, breathing, successful society, and if you start to see power try to control any mechanism of creativity in society, well...you have a problem). The problem is that under the umbrella and within the scaffolding of capitalism and markets, creating is expensive.

Additionally, there is the pressure of "making it," finding your "niche," and sacrificing one's protean ways to adhere to a fixed state just to be allowed into the present market. The costs, from keeping the artist alive to the essential materials for producing the art to where it's presented, are no joke. Having lived this way since I left home at 17 or 18, I can say that being what you are, doing what you do, and trying to make enough to survive is...no small task. Trying to live and enjoy life, vices included, while creating anything would drive anyone insane. Which is why, if you ever come across an artist who has survived and is still at it, they're a bit of a mad hatter. Plus, even if you do get your work out there, you risk the public not supporting it or even taking the time to experience it. Art is and will always be a gamble, which is what makes the life, the act, and the end result so dangerous and valuable. Art is nature's spawn and, being of its seed, contains qualities of its source: beauty, harmony, and chaos. If captured and confined, both can and will go on changing the world.

If not, if art dies, we will die along with it.

Enter tech, automation, and AI, tools for productivity and efficiency. Like Mayor Lurie's AI, drones, and surveillance around San Francisco (something Governor Newsom OK'd back in 2024), or Anthropic's blog posts written in collaboration with AI, it is hard to argue against implementing a new tool for good ends, especially when, if everything goes according to plan, it would end up benefiting humanity 100%. As always, there is a catch: something gained, something lost.

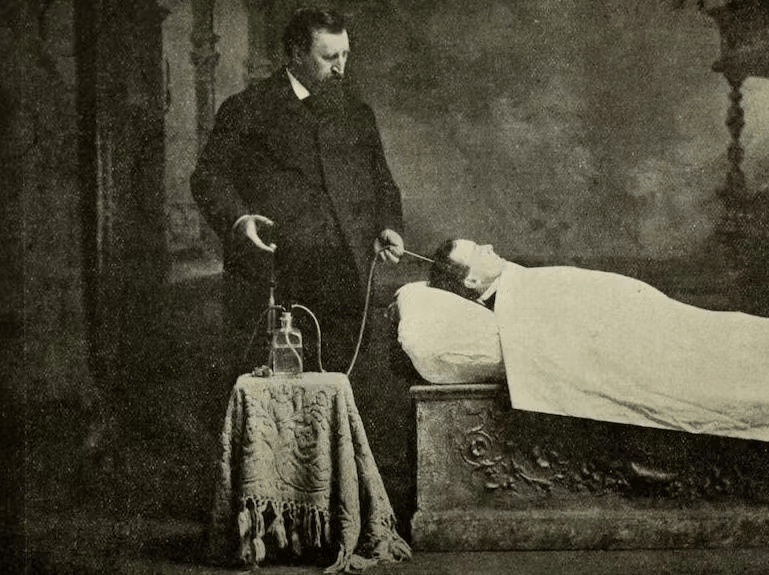

Take the video above, which shows a 3D printer laser from Monumental Labs in the middle of something called "stone cutting." Regardless of what these statues are used for, whether some toga party in LA or an apartment owner obsessed with marble statues who perhaps can't afford the real thing, the automation (the laser, the blueprint, the software, etc.) is replacing certain aspects of the sculptor. There are pluses and minuses to this, as you can see and read below.

My question is: what exactly is gained and lost? There are many answers, depending on who you ask. If you were to ask the sculptor, based on a comment I read in the comments section, they claim to have "...much wider freedom in finishing than before. I have more time and energy for details, to play with textures and to enjoy what I do." So, more time allows for more variety. OK.

For the viewer knowledgeable about CNC milling of stone, what effect does it have on their appreciation of the statue? Does it change how they see it, value it, or absorb it? Expand this question to AI-generated writing, even fiction. If the reader doesn't know AI created it and it still has the same varied effects on them, is there a difference? Was the same question posed when the typewriter was invented and commercialized, as everyone switched from handwriting stories to typing them? If AI stories "do" the same thing, who, in that society, is no longer needed, and who is? Today, there is a lot of talk and assurance that we, the humans, will always be guiding the pen or, in the case of the statues, the laser, but what if that changes? What if the consumer no longer cares and wants what they want, regardless of the creator? No matter the source? Will companies honor their original workers, their original "humans," over their profits? I'm going to say no.

This point follows a trend I've noticed more and more involving AI in business as well as in the art world: collaboration and enhancement. For the consumer, as with everything in a market, the choice of whether to buy a statue made without AI and lasers, much like the choice between whatever bread is at the store and the artisan baker down the street, comes down to cost. Once again, art, "real" art, is expensive, which Monumental Labs may be trying to address by increasing supply (I'm not even sure that's what they're doing), inevitably affecting art's significance in the process.

For the greater culture, I'm curious what this "robot-aided" period will bring. Will we be better off? Worse off? Or will this transition be so gradual and seamless, like many aspects of life, that we won't notice until one day when we look back and feel nostalgia while also being grateful for the life we have?

Hearts and fists go out to Los Angeles, California, and any other state going through these fascist intimidation tactics from the current administration and ICE.

Solidarity through unity. Unity through solidarity. Solidarity Forever.
